I grew up playing with hardware, and as a boy, I was always underfunded when it came to my experiments. While I never tried to jump off a barn roof with an umbrella or bed-sheet parachute, I did try to build a free-wheeling cart out of a couple of 2x2s for axles, a thin sheet of plywood and baby-carriage wheels held on with nails. Needless to say, I didn’t get very far with that contraption.

My mother predicted that outcome, and that’s probably why she so confidently helped me carry my cart to the top of a hill. She knew intuitively that I wasn’t going to go more than 6 inches “before the wheels came off.” This early experiment in vehicle construction did, however, teach me a valuable lesson: No matter how good a machine might look on paper, you never really know what it can (or, in that case, can’t) do until you test it.

Old Lessons, New Relevance

I have carried that philosophy with me as I progressed from teenage mechanic’s helper to aircraft restorer, pilot and eventually aeronautical engineer. Having worked in the operations and testing side of aviation, I naturally tend toward the greasy-hand part of machine verification, and I have always cast a sideways glance at anything that hasn’t proved itself in the real world, or at least on a laboratory test rig. In days past, that was the only way machines and vehicles could be proved: You had to take them out and test them. Testing could be carried to any of several limits: operational, reuse or even ultimate conditions. In short, it was nice to know firsthand what you could do to something and still use it again, as well as where it was going to break!

With the advent of modern computer modeling, many engineering organizations have moved away from the old testing techniques, substituting analysis and modeling to predict when and how a piece of equipment (or an entire aircraft) is going to bend or break. They simply rely on computer runs showing that the design has a certain amount of margin, and because the models have been checked against real-world equivalents in the past, they trust that the models will apply to the new hardware as well. This approach frequently gets good results, and when applied carefully it can save time and money while accurately verifying a design. Still, it must be approached with caution and maybe even a little suspicion, especially in the world of homebuilt aircraft.

The reasons we have to be a little suspicious are twofold. First, the results of failures in the aircraft world can be very serious. Second, in the world of homebuilt, “custom” aircraft, the sample sizes (numbers of a particular aircraft or component) are small enough that statistical analysis might very well be inaccurate due to insufficient data points. Call me old-fashioned (you can even call me a Luddite), but I am a believer in testing equipment and vehicles in the field to prove their capabilities.

The Space Shuttle launch pad escape baskets were an “E-ticket ride” if there ever was one. Theory said they would whisk a person safely from the top of the launch tower to the ground, but until they were tested, no one was sure. They were tested with human occupants only once, and that was enough.

Skin in the Game

In the early days of aviation, testing was the only way to prove an aircraft, and it involved a pilot strapping on the airplane and going flying. Proving the design was pretty much done by trying a few maneuvers. If the machine came back and the pilot survived, it was on to more rigorous testing, until the airplane proved capable of its designated mission. In those days, a design didn’t last very long; it was superseded within months by something more advanced, stronger and better. The pace of development was just that fast. So if there was a problem, or an aircraft was lost, it wasn’t as big a deal as it is with modern development programs that cost billions of dollars and involve only a very few airframes. Components are likewise costly to test because each item represents a large accumulated investment, hence the desire to prove them by analysis rather than by test.

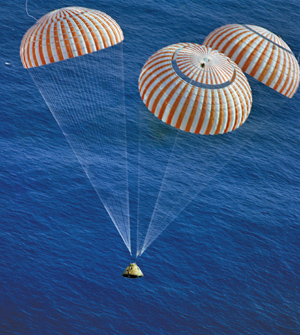

The Apollo spacecraft returned to Earth under parachutes, which were tested many times with boilerplate mockups before humans rode them home from space.

One of the reasons that the Apollo program was able to successfully land men on the moon in a short period of time was a philosophy that espoused testing. Cost was almost literally no object, and redundancy was not an option, due to weight and performance limitations. That meant that every part of the spacecraft had to be very reliable—it had to work, and the people flying had to know it would work.

Many components were sacrificed on the altar of destructive testing so that the operators would know exactly how far they could push the edge of the extremely thin envelope. Modern aerospace vehicles and programs are much more limited in resources, so people are less inclined to destroy equipment in labs and on test stands; hence the need for computer modeling. But the kicker is that the models are only as good as the equations used to describe the physical reality of the devices being tested, and they can only be truly trusted if they have been verified by testing! That’s why modeling techniques that might be acceptable for items and systems that will eventually be mass produced have a built-in fault when applied to custom gear and one-off situations: the lack of ability to prove them by testing.

It has been said that the Space Shuttle Columbia was almost worn out (from testing) before its first flight. In fact, over its lifetime, many more test hours were put on the vehicle than flight hours.

Here on Earth

So what does all of this mean to the average kit or homebuilder? Well, it prompts a number of questions that you should ask about the designs you are considering for your next build. How has the design been tested? Has the structure been loaded to destruction? How much of the design has been accepted by computer modeling and analysis versus test? A prospective builder can get an idea of the philosophy of the design team by looking at how many aircraft of that model that team has built. Is there only one in existence, or are there many built and flown? (That number is difficult to obtain. Just ask those who gather data for the KITPLANES® Buyer’s Guides every year.)

This same philosophy can be applied to aircraft components: engines, avionics and other equipment. I have an easy way to measure my confidence in an engine-development program: I ask how many engines of this type they have built and how many thousands of hours of run time they have accumulated in aircraft. In fact, I generally ask to see the development team’s airframe, the one they use for testing. If they don’t have one and are using potential customers’ airframes instead, I have to wonder just how resource-limited they are, and this leads me to believe that the test program is probably a shoestring affair.

Engine components are in a class of equipment that is ripe for extensive, methodical testing. Heat, vibration and extreme stress can all be found in an engine compartment, and hours upon hours of real-world run time have proved time and again to be the only way to build robust hardware. The lowly ignition magneto has proved itself over a century of aviation, but not without many development tests and millions of hours in service that led to developments such as pressurization for high-altitude flight. In the past two decades, electronic ignition systems have been developed with great success, but not without a lot of blood, sweat and tears on the part of the developers and testers. I can’t think of a single one of those systems that worked perfectly from the start. Rather, they have matured and gone through growing pains as weaknesses were discovered, components were beefed up and/or redesigned, and more testing was performed to prove the upgrades. Several of those systems are now on the market and fairly reliable, but it has taken years to get them there, just as it took years for magnetos to reach the point where we don’t think much about their reliability.

Avionics testing today encompasses both hardware and software. There is nothing like a good old shaker table to discover flaws in electronics boxes intended for aircraft use. It is rare that an electrical component brings the system to a screeching halt; rather, it is a connection, an exterior fastener, or a loose wire or solder joint that can only be found by testing. Electronics are almost always perfect when analyzed on paper, but in the real world, hot spots appear in a box and can affect critical components in ways no one predicted. Testing is the only way to find this out.

In the same way, software needs testing. Run time is important (how long does it keep going between resets and/or reboots?), but a well-thought-out test program will also probe every logic path to see whether the programs can be tripped up or brought to their knees. Bench testing is good, but in-flight beta testing is generally the only way to really find the faults. It is surprising to see just how many defects and errors are uncovered (and fixed) by actual field testing, even in the most carefully designed software systems. It is often a simple case of the design team not being able to anticipate “field issues” until the software mixes with the hardware in the field.

Remember: If it hasn’t been tested, you don’t know that it will work!

New equipment, devices and components hit the market for Experimental builders almost every day. Some are from large manufacturing giants, and others are turned out by homebuilders themselves in garage workshops, to be sold one at a time over the Internet. Some require little testing because their failure would have few consequences for safety or mission success. Others have a lot to do with the pilot’s safe return to earth, and for those I would demand to see how well, how long and to what extent they had been tested. The old saying goes like this: If you want to know what the engineers think it will do, check the analysis. If you want to know what it will really do, test it!

Just because the equations say a piece of equipment or structure can withstand such and such a load before breaking doesn’t mean as much as knowing that this has been proved true. Testing costs money—there is no doubt about it. But for critical components, testing is often the only way to be sure of where the limits really are. Whether you’re going to the moon or simply across your home state, the confidence of knowing how much your equipment will take before letting you down is priceless.