Much of the content of this article is based on the author’s upcoming book “A Cognitive Avionics System with an Embedded Conversational Agent.”

In multi-crew aircraft, the pilot has considerable support from the copilot, who handles many routine tasks, such as reading the checklist, changing radio frequencies, monitoring systems and communicating with air traffic control. In single-pilot operations, when all is well, these tasks are easily performed by the pilot. But we have all seen otherwise boring flights that suddenly became very busy, sometimes critically so.

Many approaches have been pursued to lessen the burden on the single pilot. The DARPA (Defense Advanced Research Projects Agency) Pilot’s Associate program was one. Over the years, several articles in various aviation magazines have described the idea of a virtual copilot. Brien Seeley, president of the CAFE Foundation, wrote an interesting article for the June 2007 issue of KITPLANES® outlining his concept: “Come Fly with Me—the eCFI.”

To date, avionics manufacturers have focused primarily on improving graphics and mode-selection button sequences. But the pilot must still interact with the avionics manually. If the pilot is already taxed with flying the aircraft (hands and eyes busy), even finding a checklist (paper or electronic) can become a challenge.

Over time, many have come to realize that the first step to smarter avionics is a more natural interface. Buttons, keypads, touchscreens and knobs may seem natural to us today because of the computer revolution, but operating them is not an innate skill. Furthermore, remembering the key sequence needed to display a particular chart or checklist can be difficult during a stressful event, especially in single-pilot operations.

During times of duress, the most natural interface for technical information is spoken language combined with graphics. Being able to ask the avionics to read a checklist or display a chart, and having it do so interactively, begins to approach the convenience of having an actual copilot.

By now you may be thinking, or should be, of HAL in the film 2001: A Space Odyssey. Indeed, sans the paranoia, HAL would make an excellent virtual copilot. Technology has not quite reached this point yet, though the debut of IBM’s Watson on the television show Jeopardy! was impressive. But even if Watson’s performance were comparable to HAL’s, few aircraft could carry the room full of computer servers that Watson requires. (Incidentally, Watson had no understanding of spoken language. The questions were provided in electronic format.)

Readers who have used Siri on the iPhone have surely wondered whether they could have something similar in their aircraft. Perhaps one day this will come to pass. But Siri has one major shortcoming: It must be connected to the Internet to function. Like Watson, Siri runs on a server farm rather than in the iPhone, and the handset itself does little of the processing of voice requests.

On modern commercial transport aircraft, a limited number of aural speech warnings are available. One of the earliest examples is the sharp “Pull up! Pull up!” announcement of the Terrain Awareness and Warning System (TAWS), accompanied by a warning horn. Later, simple advisory prompts for minor deviations in altitude, airspeed, heading, etc., were added. These came to be known among pilots as “Chatty Cathys,” along with other less printable names.

However, the fictional HAL could both understand and speak. In the last 10 years, Automatic Speech Recognition (ASR) has made remarkable progress. It is now at the point where it can be used in a limited way in avionics. Indeed, Garmin has recently released the GMA 350 audio panel with some voice-control capability. VoiceFlight has a system for entering waypoints into the Garmin GNS 430W/530W using voice, and the F-35 Joint Strike Fighter will have some voice control of systems.

Furthermore, automobile manufacturers now offer systems that far exceed the limited capability of aircraft systems. A notable example is Ford’s SYNC system. The system described here shares much of the general architecture of Ford SYNC and other automotive ASR systems: a speech-recognition module, a text-to-speech module, a natural-language parser and task manager, a vehicle-system interface and the capability to display requested graphical information. Automobile designers are leading the way, and one can hope that avionics manufacturers will build on their successes to create smarter avionics.

The systems discussed in this article are self-contained, and, while limited in capability, they point the way to the future of avionics.

Cognitive Pilot Assistant Overview

This is a working, first-generation virtual copilot that we call the Cognitive Pilot Assistant (CPA). The CPA will interactively read checklists, report overall or individual system status (e.g., fuel quantity), tell the time, announce out-of-tolerance readings (e.g., low fuel quantity) and change the frequencies of radios that are configured for remote control. While the system is not truly cognitive, the functions it performs often seem to be, and we may expect future systems to seem more and more so.

The device itself is a relatively small box containing a single-board computer (SBC). It connects to the audio panel for voice input/output (I/O) and to a source of aircraft system data. Such data are often available from the engine-monitoring avionics designed for amateur-built aircraft; several avionics manufacturers offer a suitable serial data output.
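Each engine monitor uses its own serial format, and the article does not specify one. As a minimal sketch, assume a hypothetical monitor that sends one text line per update made of comma-separated `NAME=value` pairs; the parameter names (`FUEL_L`, `FUEL_P`, `VOLTS` and so on) are invented for illustration and do not correspond to any vendor’s protocol.

```python
from __future__ import annotations


def parse_record(line: str) -> dict[str, float]:
    """Parse one record in a HYPOTHETICAL 'NAME=value,NAME=value' format.

    Real engine monitors each use their own vendor-specific format; this
    format is invented for illustration. Malformed fields are skipped
    rather than raising, since serial lines are occasionally garbled.
    """
    readings: dict[str, float] = {}
    for field in line.strip().split(","):
        name, sep, value = field.partition("=")
        name = name.strip()
        if not sep or not name:
            continue  # no "=" or no parameter name: ignore this field
        try:
            readings[name] = float(value)
        except ValueError:
            continue  # non-numeric value: ignore this field
    return readings


print(parse_record("FUEL_L=23.0,FUEL_R=24.0,FUEL_P=1.5,VOLTS=11.7\r\n"))
# {'FUEL_L': 23.0, 'FUEL_R': 24.0, 'FUEL_P': 1.5, 'VOLTS': 11.7}
```

On the actual box, each line read from the serial port (for example with pySerial’s `Serial.readline()`, decoded to text) would be handed to a parser of this kind, and the resulting dictionary would feed both the checklist reader and the tolerance monitor.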

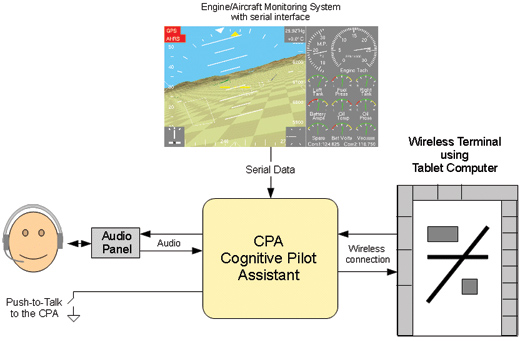

Figure 1 depicts a top-level view of the installation. As you can see, the interconnection to the aircraft avionics is straightforward. Aside from power and ground, there are three other connections: two wires to a push-to-talk (PTT) switch, two wires to the aircraft audio panel and a serial cable connection to the existing engine/aircraft monitoring system.

Figure 1: Cognitive Pilot Assistant overview.

Operation

The CPA is operated by voice command. For example, the simplest request is “Say time,” to which the CPA responds, “The time is hh hours, mm minutes and ss seconds Zulu.” The word “Say” is not even required; “Time” works equally well. To prevent responses to stray speech, the PTT switch must be pressed while making requests.
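Producing the spoken time reply is a small formatting job. The sketch below builds the sentence quoted above from the current UTC (Zulu) time; the function name `say_time` is hypothetical, and the two-digit fields simply mirror the “hh/mm/ss” pattern in the text. How a given text-to-speech engine pronounces “07” is an assumption to check on the real system.

```python
from __future__ import annotations

from datetime import datetime, timezone


def say_time(now: datetime | None = None) -> str:
    """Build the spoken time reply in UTC (Zulu).

    `now` should be timezone-aware; if omitted, the current UTC time is used.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    utc = now.astimezone(timezone.utc)  # convert any aware time to Zulu
    return (f"The time is {utc.hour:02d} hours, {utc.minute:02d} minutes "
            f"and {utc.second:02d} seconds Zulu.")


print(say_time(datetime(2012, 6, 1, 14, 7, 9, tzinfo=timezone.utc)))
# The time is 14 hours, 07 minutes and 09 seconds Zulu.
```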

Checklists are requested in a similar manner. Simply press the PTT switch and say “Engine start checklist.” The CPA asks for confirmation by repeating, “Engine start checklist.” The pilot presses the PTT switch and says “Check,” and the CPA then reads the checklist interactively, checking sensor readings where possible.

A typical checklist session might go as follows:

Pilot: “Engine start checklist.”

CPA: “Engine start checklist.”

Pilot: “Check.”

CPA: “Master on. Three green.”

(The master switch should be on at this point for the CPA to work. “Three green” refers to the gear lights on retractable-gear aircraft.)

Pilot: “Check.”

CPA: “Battery voltage is normal at 11.7 volts.”

Pilot: “Check.”

CPA: “Fuel on mains.”

Pilot: “Check.”

CPA: “Left fuel quantity is normal at 23 gallons. Right fuel quantity is normal at 24 gallons.”

Pilot: “Check.”

At any point in the dialogue, the pilot can ask the CPA to repeat its last response. For example, if the CPA states a fuel quantity different from what the pilot sees, the pilot may say “Say again,” and the CPA will repeat the fuel quantity it senses.

It is actually not necessary for the pilot to say “Check.” If the PTT switch is held depressed briefly, the CPA will assume that “Check” is intended.
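One way to express the “press instead of saying Check” shortcut is to look at both the recognized words and how long the PTT switch was held. The sketch assumes the recognizer reports an empty word list when nothing was said, and it uses a hypothetical one-second threshold (`CHECK_PRESS_MAX_S`) for “briefly”; the article does not give the actual value. The accepted phrases are the four checklist acknowledgments described later in the article.

```python
from __future__ import annotations

# Hypothetical threshold: a silent press no longer than this counts as "Check".
CHECK_PRESS_MAX_S = 1.0

RESPONSES = ("check", "say again", "skip", "stop")


def interpret_press(words: list[str], hold_s: float) -> str | None:
    """Map one PTT press (recognized words plus hold time) to a response.

    Returns None when the press should not be treated as a checklist
    response, e.g., unrecognized speech or a long silent press.
    """
    phrase = " ".join(w.lower() for w in words)
    if phrase in RESPONSES:
        return phrase
    if not words and hold_s <= CHECK_PRESS_MAX_S:
        return "check"  # brief press with nothing said: assume "Check"
    return None
```

Treating a long silent press as “not understood” rather than “Check” is a conservative design choice for this sketch: an accidental, stuck or prolonged press then does not silently tick off a checklist item.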

While the CPA is awaiting a request from the pilot, it checks the system readings periodically. If a system reading is out of tolerance, the CPA will announce, for example, “Attention Captain! Fuel pressure is low at 1.5 psi.” This may be especially useful when the pilot might be distracted by radio communications. If necessary, the CPA is readily silenced by deselecting it on the audio panel.
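The periodic check can be sketched as a loop that scans the sensors, asks a tolerance function which parameters are out of limits and speaks only the new alerts. The callables `read_sensors`, `find_alerts` and `speak` are hypothetical stand-ins for the data interface, the limit check and the text-to-speech module. Announcing each alert once, rather than on every scan, is an assumption; the article does not say how repeats are handled.

```python
from __future__ import annotations

import threading
from typing import Callable


def monitor(read_sensors: Callable[[], dict[str, float]],
            find_alerts: Callable[[dict[str, float]], dict[str, str]],
            speak: Callable[[str], None],
            stop: threading.Event,
            period_s: float = 1.0) -> None:
    """Scan sensors every `period_s` seconds until `stop` is set.

    `find_alerts` maps readings to {parameter: spoken alert}. An alert is
    announced when its parameter first goes out of tolerance; it is not
    repeated on every scan, but is announced again if the parameter
    returns to normal and later goes out of tolerance again.
    """
    active: set[str] = set()
    while not stop.is_set():
        alerts = find_alerts(read_sensors())
        for param, message in alerts.items():
            if param not in active:
                speak(message)
        active = set(alerts)
        stop.wait(period_s)  # sleeps, but wakes early if stop is set
```

On the device, a loop like this would typically run in a background thread (for example `threading.Thread(target=monitor, args=(...), daemon=True).start()`) so that the speech-request side stays responsive.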

The checklists are created by the pilot and stored in the CPA as text files. Any text editor should be able to generate these files. The CPA comes with generic checklists that may be edited by pilots for their specific aircraft.
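The article does not publish the checklist file format. As a minimal sketch, assume one spoken item per line, with blank lines and lines starting with `#` ignored, and a hypothetical naming rule that maps “Engine start checklist” to `engine_start.txt`. The sensor checks in the example dialogue would need extra markup in the file; this sketch leaves them out.

```python
from __future__ import annotations

import re
from pathlib import Path


def checklist_filename(spoken_name: str) -> str:
    """Hypothetical naming rule: 'Engine start checklist' -> 'engine_start.txt'."""
    words = re.findall(r"[a-z0-9]+", spoken_name.lower())
    if words and words[-1] == "checklist":
        words = words[:-1]  # the word "checklist" is not part of the file name
    return "_".join(words) + ".txt"


def parse_checklist(text: str) -> list[str]:
    """One item per non-blank line; lines starting with '#' are comments."""
    return [line.strip() for line in text.splitlines()
            if line.strip() and not line.strip().startswith("#")]


def load_checklist(directory: Path, spoken_name: str) -> list[str]:
    """Read the checklist file for a spoken checklist name."""
    path = directory / checklist_filename(spoken_name)
    return parse_checklist(path.read_text(encoding="utf-8"))


SAMPLE = """\
# Engine start checklist (generic; edit for your aircraft)
Master on. Three green.
Fuel on mains.
"""
print(parse_checklist(SAMPLE))  # ['Master on. Three green.', 'Fuel on mains.']
```

A plain one-item-per-line layout keeps the files editable in any text editor, which matches the article’s point that pilots customize the generic checklists themselves.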

Some Operational Details

Microphone. A good-quality, noise-canceling boom microphone is important. As good as today’s ASR engines are, they still do not do well in high-ambient-noise environments. Fortunately, a typical aviation-quality headset/microphone combination meets this requirement. Such a microphone achieves noise canceling mechanically through a differential microphone element: ambient noise, striking both sides of the element, produces essentially no output, while voice sounds, impinging on only one side, act differentially on the element to produce a voltage proportional to the voice sounds.
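The cancellation argument can be written as an idealized model, assuming the element’s output voltage $v$ is proportional (sensitivity $k$) to the pressure difference across it, the voice pressure $s$ reaches only the front face and the ambient noise pressure $n$ reaches both faces equally. Real elements cancel noise only partially, so this is a sketch of the principle rather than a performance figure.

```latex
\begin{aligned}
p_{\text{front}} &= s + n, \qquad p_{\text{rear}} = n,\\
v &= k\,(p_{\text{front}} - p_{\text{rear}}) = k\,s
\end{aligned}
```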

Wireless Terminal. Although not essential to the operation of the CPA, a tablet PC is useful for displaying charts, checklist text, a backup SVS/system monitor, etc. (A laptop is better suited to CPA system maintenance, such as updating checklists and other files.)

At a minimum, the tablet should have a Java-enabled browser through which the CPA can be accessed. There are also apps that provide access via Wi-Fi or Bluetooth. The CPA/tablet link is secure, so a bit of setup is required before first use.

CPA Software Details

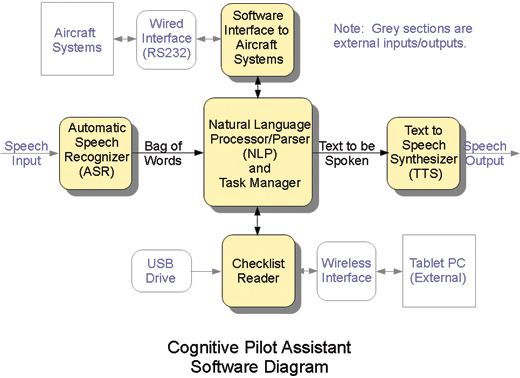

Figure 2 is a top-level block diagram of the CPA software.

The Natural Language Parser (NLP) and Task Manager (TM) perform the heavy lifting. The details of the NLP and TM are beyond the scope of this article, but here’s a general picture.

The NLP accepts the “bag of words” from the ASR and parses it into an actionable request, which is then passed to the TM for execution.
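The article leaves the NLP’s internals out of scope, so the following is only a toy keyword matcher to illustrate the idea of turning a bag of words into an action. The `Action` record and the task names `read_checklist`, `say_time` and `report_status` are hypothetical. The set of words decides what kind of request it is, and the original word order supplies arguments such as the checklist name.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Action:
    task: str           # hypothetical Task Manager handler name
    argument: str = ""  # e.g., checklist name or system name


def parse(words: list[str]) -> Action | None:
    """Toy keyword parser; the filler word 'say' is ignored."""
    tokens = [w.lower() for w in words if w.lower() != "say"]
    bag = set(tokens)
    if "checklist" in bag:
        name = " ".join(t for t in tokens if t != "checklist")
        return Action("read_checklist", name) if name else None
    if bag == {"time"}:
        return Action("say_time")
    if "status" in bag:
        system = " ".join(t for t in tokens if t != "status")
        return Action("report_status", system)  # empty means overall status
    return None  # not understood


print(parse(["Engine", "start", "checklist"]))
# Action(task='read_checklist', argument='engine start')
```

A keyword approach like this is brittle compared with a real grammar-based parser, but it shows the division of labor: the parser only decides *what* is being asked, and the TM decides *how* to carry it out.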

Figure 2: Cognitive Pilot Assistant software diagram.

For example, requesting a checklist causes the TM to send the request to the Checklist Reader module, which searches for the text file corresponding to the requested checklist. The file is then sent line by line to the TM and on to the text-to-speech (TTS) module. The pilot must acknowledge each line with “Check,” “Say again,” “Skip” or “Stop.” “Skip” marks the item as unchecked, and the pilot is prompted for these items at the end of the checklist. “Stop” terminates the reading of the checklist and returns control to the NLP/TM. As mentioned, the checklists are text files created by the aircraft owner.
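The acknowledgment rules above amount to a small state machine. The sketch below reads the items, repeats an item on “say again” (or on anything it does not recognize), collects skipped items, re-prompts once for them at the end and stops on “stop.” Running out of pilot input is treated as “stop,” and the phrases “Unchecked items remain.” and “Checklist complete.” are hypothetical wording, not quoted from the CPA.

```python
from __future__ import annotations

from typing import Callable, Iterable, Iterator


def _read_pass(items: list[str], speak: Callable[[str], None],
               replies: Iterator[str]) -> tuple[list[str], bool]:
    """Read items once. Returns (unchecked items, whether pilot said stop)."""
    unchecked: list[str] = []
    for i, item in enumerate(items):
        speak(item)
        reply = next(replies, "stop")  # no more input: treat as "stop"
        while reply not in ("check", "skip", "stop"):
            speak(item)  # "say again" (or anything unrecognized): repeat
            reply = next(replies, "stop")
        if reply == "stop":
            return unchecked + items[i:], True
        if reply == "skip":
            unchecked.append(item)
    return unchecked, False


def run_checklist(items: list[str], speak: Callable[[str], None],
                  responses: Iterable[str]) -> list[str]:
    """Read a checklist, then re-prompt once for skipped items.

    Returns the items still unchecked when reading ends.
    """
    replies = iter(responses)
    unchecked, stopped = _read_pass(items, speak, replies)
    if unchecked and not stopped:
        speak("Unchecked items remain.")  # hypothetical wording
        unchecked, _ = _read_pass(unchecked, speak, replies)
    if not unchecked:
        speak("Checklist complete.")  # hypothetical wording
    return unchecked
```

Passing `speak` and the stream of responses in as parameters keeps the logic independent of the actual ASR and TTS engines, so it can be exercised with a plain list of replies.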

The aircraft systems are continuously monitored for out-of-tolerance readings. When such a reading is detected, an alert is sent to the TM and passed on to the TTS module. The out-of-tolerance limits are also defined in text files created by the aircraft owner.
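The limits file format is not given in the article. As a sketch, assume one comma-separated line per parameter (key, spoken name, units, low limit, high limit) with `#` comment lines. The numbers in the test data are placeholders, not real limits for any engine; the alert wording follows the example quoted in the Operation section.

```python
from __future__ import annotations


def parse_limits(text: str) -> dict[str, tuple[str, str, float, float]]:
    """Parse HYPOTHETICAL 'KEY, spoken name, units, low, high' lines.

    A line with the wrong number of fields raises ValueError, which is
    arguably safer than silently dropping a limit.
    """
    limits: dict[str, tuple[str, str, float, float]] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, name, units, low, high = (f.strip() for f in line.split(","))
        limits[key] = (name, units, float(low), float(high))
    return limits


def find_alerts(readings: dict[str, float],
                limits: dict[str, tuple[str, str, float, float]]) -> dict[str, str]:
    """Return {parameter: spoken alert} for every out-of-tolerance reading."""
    alerts: dict[str, str] = {}
    for key, (name, units, low, high) in limits.items():
        value = readings.get(key)
        if value is None:
            continue  # no data for this parameter
        if value < low:
            state = "low"
        elif value > high:
            state = "high"
        else:
            continue  # within limits
        alerts[key] = f"Attention Captain! {name} is {state} at {value:g} {units}."
    return alerts
```

Keying the alerts by parameter, rather than returning bare strings, lets a caller tell a persisting alert from a new one even when the reported value changes slightly between scans.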

Conclusion

While the CPA is primitive compared to Arthur C. Clarke’s HAL 9000, Clarke’s vision is driving future development in the technology. There will come a day when talking to our aircraft is as natural as talking to a real copilot, and this virtual copilot will handle radio communications, altitude/heading changes and more using computer speech technology. The Cognitive Pilot Assistant is just version 0.1.

For more information on James Hauser’s work and his forthcoming book, visit www.aerospectra.com.