The Space Shuttle flight-control systems included backup computer software that functioned the same as the primary system, but was written by a different company so that it wouldn’t have the same bugs.

I guess you can call me a pragmatist. I prefer to think of myself as a realist. But mostly, I like to think of myself as what I really am—a flight operations engineer. Operations engineers are different from design engineers (we’re right, and they’re wrong). Seriously, though, different doesn’t signify better or worse; it just means different.

I got to thinking about this one day after reading a post on an EFIS Internet group that I help moderate. The poster had found a bug in his software, and he hoped the manufacturer would fix it soon because he didn’t feel as though he could depend on the EFIS until it had been repaired. He didn’t say what the bug was, so it is hard to evaluate whether it was a “safety of flight” issue or merely an ancillary function that wasn’t working—or if it just was not working right. (I am not denigrating the poster; each of us has our own comfort level.) But the concept of expecting software to be bug free was, I realized, a significant difference between designers and operators.

The Bug-Free Myth

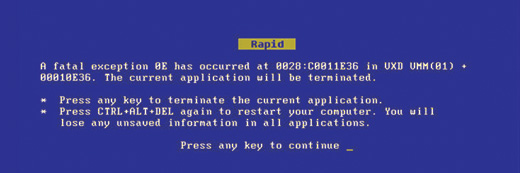

You see, I firmly believe that bug-free software is a myth, not unlike unicorns, Bigfoot and the lost continent of Atlantis. It’s not that we don’t strive for and demand quality and perfection; it’s just that I have never met software (including extremely high-reliability aerospace software) that wasn’t able to surprise me. I have been flying man-rated aerospace machines for more than 25 years, and I can assure you that I have encountered my share of bugs that the designers would have sworn were impossible. I have had computers grind to a halt; I have had systems go into looping dilemmas; and I have seen the ever-popular “stack overflow” any number of times. The cause is different in every instance, but almost without exception, the problem occurred just as we had gotten into a corner of the software world that no one had ever considered. Bugs happen and, no, they cannot be reliably predicted. Vast statistical studies are performed on expensive weapons systems to show how remote the chances of such a problem might be, but the number is never zero.

The dreaded blue screen. The last thing you want to see on your cockpit computer is an error message telling you that it has locked up and needs to be rebooted.

Overlooking the Obvious

The frustrating thing about these bugs is how obvious they look once they have been found. A few years ago, a flight of the most advanced fly-by-wire fighter jets in the United States military crossed the International Date Line out over the Pacific Ocean, and something in the computers (which control virtually the entire airplane, including the radios) divided by zero as the longitude changed from +180 to -180, and the whole thing locked up. Fortunately, the flight was accompanied by tanker aircraft. The flight controls had a reversionary mode, and the quick-thinking flight lead used his emergency radio to contact the tankers, which led him back to Hawaii. In retrospect, crossing such a significant mathematical anomaly should be an obvious thing to test, but no one thought of it.
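To see why the ±180 boundary deserves a test case of its own, here is a minimal Python sketch. It is not the fighter's actual software, whose details are not given here; the function names `naive_delta_lon` and `wrapped_delta_lon` are hypothetical, and the example only shows how a simple subtraction of longitudes misbehaves when the sign flips.

```python
def naive_delta_lon(lon_from, lon_to):
    """Longitude change in degrees, ignoring the wrap at +/-180 (hypothetical helper)."""
    return lon_to - lon_from


def wrapped_delta_lon(lon_from, lon_to):
    """Longitude change in degrees, folded into the range [-180, 180)."""
    return (lon_to - lon_from + 180.0) % 360.0 - 180.0


if __name__ == "__main__":
    # A short eastbound hop across the date line: 179.9 E to 179.9 W.
    before, after = 179.9, -179.9
    print("naive:  ", naive_delta_lon(before, after))    # looks like a jump of nearly 360 degrees
    print("wrapped:", wrapped_delta_lon(before, after))  # the real change, about 0.2 degrees
```

Away from the date line the two functions agree, which is exactly why a test flown only over the continental United States would never notice the difference.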

So what do we do about it? Do we refuse to fly until they are all found and fixed? Nope, that is a logical impossibility, just like expecting to find a perpetual motion machine. You can’t find something that may or may not be there when you don’t know what it would look like. Enter the operational engineer’s perspective. “OK,” we say, “let’s just assume that we are going to have bugs. The question is not how we prevent them (we aren’t the designers), but rather what we’ll do when they hit us.” Note that I didn’t say if, but when. You plan for the worst case and have a backup plan. You may not even experience a complete computer lockup; bugs can be more subtle, such as when the computer keeps running but slowly starts giving you faulty information.

The F-22 Raptor is an example of an all-electronic aircraft that suffered a significant software error when it encountered the International Date Line, something no one had thought about during development testing.

Risk Management

It all comes back to risk management. I simply don’t expect the EFIS in any air or spacecraft, certified or Experimental, to be perfect. But I do expect the overall system design to have a backup and acceptable reliability. The AHRS goes belly up? Have a spare one. Display unit freezes? Have a spare display, or backup instruments. Autopilot goes on strike? Be ready to hand-fly to safety. If we refuse to fly a system until it is proven to be bug free, then we will never fly the system. You just can’t prove that something isn’t there, at least not in the real world.

I frequently run beta software for manufacturers of the various avionics boxes in my airplane. Beta software is a pre-release version used for testing, and by its very nature is expected to have a few bugs. It is the tester’s job to try to discover them. For safety reasons, I always run “released” software in at least one of my EFIS displays and AHRS units. That way, if the beta version decides it is time to start acting up, I can turn it off and use what I know (to a degree of certainty) should still work. And if that fails? Well, I go to Plan C. I always try to have a third option.

A well-thought-out glass cockpit will have multiple independent devices with different software. In this case, the EFIS, autopilot and ADI all work separately to come up with the same answers for the pilot.
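One way to picture three independent boxes "coming up with the same answers" is a simple mid-value cross-check. The Python sketch below is an illustration only, not how any particular EFIS or autopilot works; the function name `cross_check`, the source labels and the 5-degree tolerance are all assumptions made for the example.

```python
from statistics import median


def cross_check(pitch_readings, tolerance_deg=5.0):
    """Compare pitch readings (degrees) from independent sources.

    Returns the middle value and the names of any sources that disagree
    with it by more than tolerance_deg. The tolerance is an assumed number.
    """
    voted = median(pitch_readings.values())
    suspects = [
        name
        for name, value in pitch_readings.items()
        if abs(value - voted) > tolerance_deg
    ]
    return voted, suspects


if __name__ == "__main__":
    # Hypothetical snapshot: the standalone ADI has drifted away from the other two.
    readings = {"efis": 2.1, "autopilot": 2.4, "adi": 14.0}
    voted, suspects = cross_check(readings)
    print("use pitch:", voted)
    print("distrust: ", suspects)
```

With three sources, one bad value cannot drag the middle value far, so the pilot (or the software) can tell which box to ignore. With only two, you know they disagree but not which one is lying, which is one argument for always having a Plan C.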

I’ll go out on a limb and state that all of the popular EFISes have, or will exhibit, bugs of some kind. The major reliability-related ones have most likely been fixed in testing. Minor, subtle bugs are still there for us to find. The answer is to design your overall avionics package to provide you with unrelated redundancy. In most highly integrated, fly-by-wire aerospace vehicles, there is a reversionary system of some sort that keeps the pilot connected to the flight controls if the primary software system goes kaput. In my airplane, I have an autopilot that can run independently of the EFIS, and is from a different manufacturer. In essence, I am planning on seeing failures, on finding bugs. But good risk management practices keep those bugs from being fatal. I appreciate the hard work of avionics and software designers who strive for perfection. I just believe that perfection is unattainable by human beings, no matter how hard we try. At some point, we just have to go fly!


Paul Dye is an aeronautical engineer and multi-time builder. He currently flies a Van’s RV-8 and, along with his wife, Louise, is building an RV-3.